-

ElasticSearch Portal

$299.00 -

Cloud Networking Backbone

$142.00 -

Distributed Cloud Desktop

$34.99 -

Domains & Gateways

$12.99 -

RPM Cloud Server

$39.99 -

Enterprise Workmail

$24.99 -

Chat Bot Module for Customer Support

$34.99 -

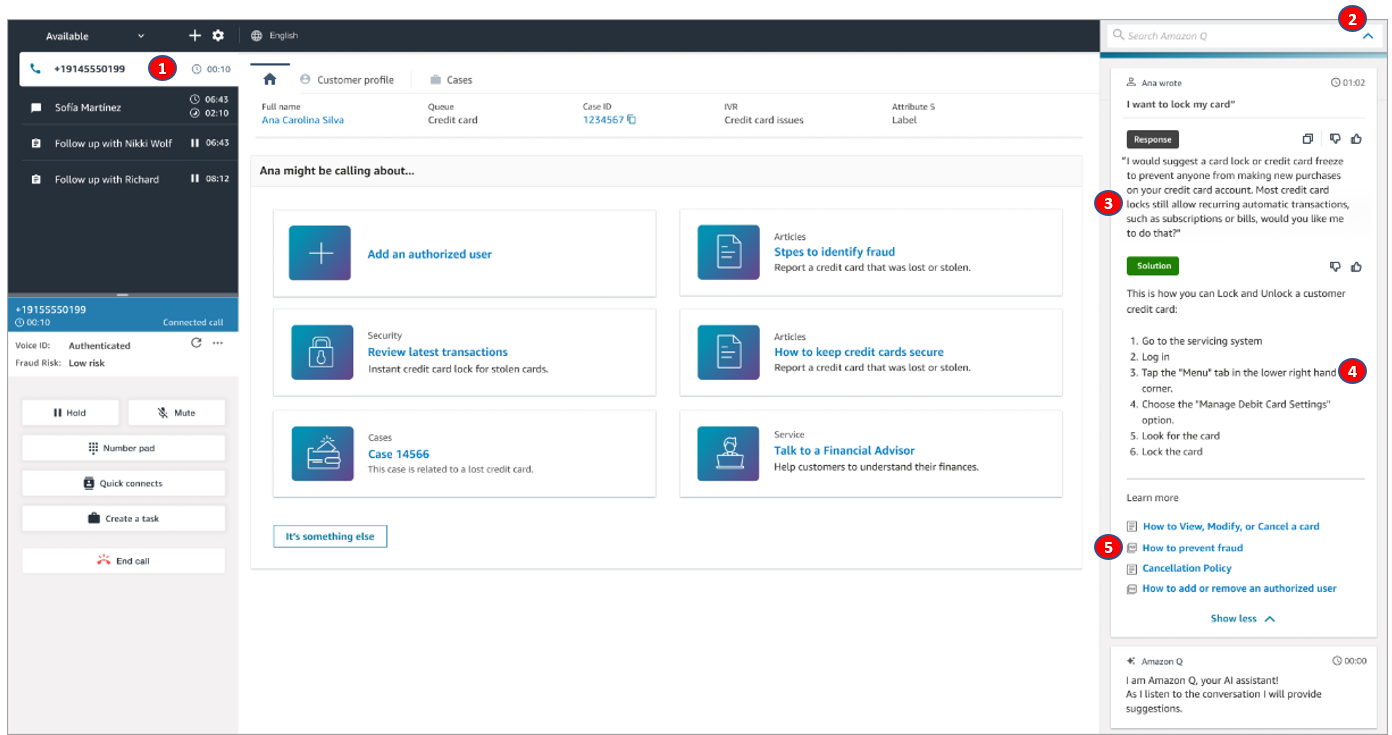

Contact Center Prime with Customer Sentiment Analysis

$79.99 -

Standard Contact Center

$39.99 -

Growth Contact Center with SMS

$61.99

Modern web application authentication and authorization with Amazon VPC Lattice

When building API-based web applications in the cloud, there are two main types of communication flow in which identity is an integral consideration: user-to-service and service-to-service.

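To design an authentication and authorization solution for these flows, you need to add an extra dimension to each flow: authentication (which identity is presented and how it is verified) and authorization (what that identity is permitted to do).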

In each flow, a user or a service must present some kind of credential to the application service so that it can determine whether the flow should be permitted. The credentials are often accompanied by other metadata that can then be used to make further access control decisions.

If your application already has client authentication, such as a web application using OpenID Connect (OIDC), you can still use the sample code to see how secure service-to-service flows can be implemented with VPC Lattice.

For a web application, particularly one that is API based and composed of multiple components, VPC Lattice is a great fit. With VPC Lattice, you can use native AWS identity features for credential distribution and access control, without the operational overhead that many application security solutions require.

For this example, the web application is constructed from multiple API endpoints. These are typical REST APIs, which provide API connectivity to various application components.

VPC Lattice doesn’t support OAuth2 client or inspection functionality; however, it can verify HTTP header contents. This means you can use header matching within a VPC Lattice service policy to grant access to a VPC Lattice service only if the correct header is included. Because the header is generated from validation that occurs before the request enters the service network, we can use context about the user at the service network or service level to make access control decisions.

Figure 1: User-to-service flow

The solution uses Envoy to terminate the HTTP request from an OAuth 2.0 client, as shown in Figure 1: User-to-service flow.

Figure 2: JWT Scope to HTTP headers

By adding an authorization policy that permits access only from Envoy (by validating Envoy’s SigV4 signature) and only with the correct scopes provided in HTTP headers, you can effectively lock down a VPC Lattice service to specific verified users who come through Envoy and present specific OAuth2 scopes in their bearer token.
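The following minimal sketch shows what such a statement might look like, built as a Python dictionary and printed as JSON. The role name `EnvoyFrontendTaskRole` comes from this solution. The account ID `111122223333` and the scope name `app1.read` are hypothetical placeholders. The sketch assumes that Envoy writes the validated scopes into the `x-jwt-scope` header as a space-separated string. It relies on VPC Lattice's `vpc-lattice-svcs:RequestHeader/<header-name>` condition key.

```python
import json

ACCOUNT_ID = "111122223333"  # hypothetical account ID
ENVOY_ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/EnvoyFrontendTaskRole"
REQUIRED_SCOPE = "app1.read"  # hypothetical OAuth2 scope name


def envoy_scope_statement(role_arn: str, scope: str) -> dict:
    """Allow Invoke only for SigV4-signed requests from Envoy that carry the scope."""
    return {
        "Effect": "Allow",
        "Principal": {"AWS": [role_arn]},
        "Action": "vpc-lattice-svcs:Invoke",
        "Resource": "*",
        "Condition": {
            "StringLike": {
                # Envoy sets x-jwt-scope only after it has validated the bearer token
                "vpc-lattice-svcs:RequestHeader/x-jwt-scope": f"*{scope}*"
            }
        },
    }


policy = {
    "Version": "2012-10-17",
    "Statement": [envoy_scope_statement(ENVOY_ROLE_ARN, REQUIRED_SCOPE)],
}
print(json.dumps(policy, indent=2))
```

`StringLike` applies the wildcards to the whole header value. As a result, `*app1.read*` would also match a longer scope such as `app1.readwrite`. Choosing scope names that are not prefixes of one another avoids that ambiguity.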

To answer the original question of where the identity comes from: the user’s identity is provided by the user when they authenticate with their identity provider (IdP). In addition, Envoy presents its own identity, derived from its underlying compute, to enter the VPC Lattice service network. From a configuration perspective, this means your user-to-service communication flow doesn’t require the services to understand the user, or to store user or machine credentials.

The sample code provided shows a full Envoy configuration for VPC Lattice, including SigV4 signing, access token validation, and extraction of JWT contents to headers. This reference architecture supports various clients, including server-side web applications, thick Java clients, and even command line interface-based clients calling the APIs directly. I don’t cover OAuth clients in detail in this post; however, the optional sample code allows you to use an OAuth client and flow to talk to the APIs through Envoy.

In the service-to-service flow, you need a way to provide AWS credentials to your applications and configure them to use SigV4 to sign their HTTP requests to the destination VPC Lattice services. Your application components can have their own identities (IAM roles), which allows you to uniquely identify application components and make access control decisions based on the particular flow required. For example, application component 1 might need to communicate with application component 2, but not application component 3.
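In production, you would normally let an AWS SDK sign requests with the ECS task role's credentials, for example by using botocore's `SigV4Auth`. The standard-library sketch below only makes the SigV4 steps visible: the canonical request, the string to sign, the derived signing key, and the resulting `Authorization` header. It makes several assumptions:

- the service name is `vpc-lattice-svcs`;
- the path needs no further URI encoding;
- the query string is already in canonical form;
- the payload is left unsigned and marked with `x-amz-content-sha256: UNSIGNED-PAYLOAD`.

The hostnames, region, and credentials are placeholders.

```python
import datetime
import hashlib
import hmac
from urllib.parse import urlsplit

SERVICE = "vpc-lattice-svcs"  # SigV4 service name assumed for VPC Lattice


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sign_request(method, url, region, access_key, secret_key,
                 session_token=None, now=None):
    """Return headers carrying a SigV4 signature for a request with an unsigned body."""
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    parts = urlsplit(url)

    headers = {
        "host": parts.netloc,
        "x-amz-date": amz_date,
        # The body is not signed; this marker value says so explicitly
        "x-amz-content-sha256": "UNSIGNED-PAYLOAD",
    }
    if session_token:  # ECS task roles issue temporary credentials
        headers["x-amz-security-token"] = session_token

    # Canonical headers: lowercase names, sorted, trimmed values, newline-terminated
    signed_headers = ";".join(sorted(headers))
    canonical_headers = "".join(f"{k}:{headers[k].strip()}\n" for k in sorted(headers))
    canonical_request = "\n".join([
        method,
        parts.path or "/",
        parts.query,  # assumed to be canonical (sorted and encoded) already
        canonical_headers,
        signed_headers,
        "UNSIGNED-PAYLOAD",
    ])

    scope = f"{date_stamp}/{region}/{SERVICE}/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    # Derive the signing key: an HMAC chain over date, region, service, terminator
    key = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, SERVICE, "aws4_request"):
        key = _hmac(key, part)
    signature = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()

    headers["authorization"] = (
        f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
        f"SignedHeaders={signed_headers}, Signature={signature}"
    )
    return headers
```

The returned headers would be attached to the outgoing request to a service such as `https://app1.application.internal/`. VPC Lattice then recovers the caller's IAM principal from the signature.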

Figure 3: Service-to-service flow

This design uses a service network auth policy that permits access to the service network only by specific IAM principals. This acts as a guardrail that provides overall access control over the service network and its underlying services. Even if an individual service auth policy is removed, the service network policy is still enforced first. As a result, you can be confident that you can identify the sources of network traffic into the service network and block traffic that doesn’t come from a previously defined AWS principal.

The preceding auth policy example grants permissions to any authenticated request that uses one of the IAM roles app1TaskRole, app2TaskRole, app3TaskRole or EnvoyFrontendTaskRole to make requests to the services attached to the service network. You will see in the next section how service auth policies can be used in conjunction with service network auth policies.
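The sketch below generates a service network auth policy of that shape in Python. The four role names come from the text. The account ID `111122223333` is a hypothetical placeholder, and a real policy might add further conditions.

```python
import json

ACCOUNT_ID = "111122223333"  # hypothetical account ID
ALLOWED_ROLES = ["app1TaskRole", "app2TaskRole", "app3TaskRole", "EnvoyFrontendTaskRole"]


def service_network_policy(account_id: str, role_names: list) -> dict:
    """Guardrail: only requests signed by these IAM roles may reach services in the network."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "AWS": [f"arn:aws:iam::{account_id}:role/{name}" for name in role_names]
                },
                "Action": "vpc-lattice-svcs:Invoke",
                "Resource": "*",
            }
        ],
    }


print(json.dumps(service_network_policy(ACCOUNT_ID, ALLOWED_ROLES), indent=2))
```

Keeping this policy coarse, with a list of principals and no per-path rules, leaves the fine-grained decisions to the individual service auth policies.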

Individual VPC Lattice services can have their own policies defined and implemented independently of the service network policy. This design uses a service policy to demonstrate both user-to-service and service-to-service access control.

The preceding auth policy is an example that could be attached to the app1 VPC Lattice service. The policy contains two statements:

As with a standard IAM policy, there is an implicit deny, meaning no other principals will be permitted access.
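To make the evaluation concrete, here is a deliberately simplified Python model of an app1 service policy with two allow statements:

- one for app2 (service-to-service);
- one for Envoy that also requires a scope header (user-to-service).

Every other request falls through to the implicit deny. This is not how VPC Lattice evaluates policies internally. The model ignores the service network policy and uses `fnmatch` as a rough stand-in for `StringLike`. The account ID and the `app1.read` scope are hypothetical.

```python
from fnmatch import fnmatchcase

ACCOUNT = "111122223333"  # hypothetical account ID


def role(name: str) -> str:
    return f"arn:aws:iam::{ACCOUNT}:role/{name}"


# Simplified stand-ins for the two app1 allow statements
APP1_ALLOW_STATEMENTS = [
    # service-to-service: app2 may call app1, no header conditions
    {"principals": {role("app2TaskRole")}, "headers": {}},
    # user-to-service: Envoy may call app1 only with a matching scope header
    {"principals": {role("EnvoyFrontendTaskRole")}, "headers": {"x-jwt-scope": "*app1.read*"}},
]


def is_allowed(principal: str, headers: dict, statements: list) -> bool:
    """Allow if any statement matches; otherwise fall through to the implicit deny."""
    lowered = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    for stmt in statements:
        if principal not in stmt["principals"]:
            continue
        if all(name in lowered and fnmatchcase(lowered[name], pattern)
               for name, pattern in stmt["headers"].items()):
            return True
    return False  # implicit deny: no statement matched
```

For example, `is_allowed(role("app3TaskRole"), {}, APP1_ALLOW_STATEMENTS)` returns `False`, because no statement names app3.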

The caller principals are identified by VPC Lattice through the SigV4 signing process. This means that, by using the identities provisioned to the underlying compute, the network flow can be associated with a service identity, which can then be authorized by VPC Lattice service auth policies.

This model of access control supports a distributed development and operational model. Because the service network auth policy is decoupled from the service auth policies, the service auth policies can be iterated upon by a development team without impacting the overall policy controls set by an operations team for the entire service network.

Figure 4: CDK deployable solution

The AWS CDK solution deploys four Amazon ECS services: one for the frontend Envoy server for the user-to-service flow, and three for the backend application components. Figure 4 shows the solution when deployed with the internal domain parameter application.internal.

Each backend application component is a simple Node.js Express server that prints the contents of your request in JSON format and performs service-to-service calls.
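The sample itself uses Node.js and Express. For consistency with the other examples here, the sketch below is a hypothetical Python standard-library stand-in for the echo behavior only. It returns the method, path, and headers of each GET request as JSON and does not make service-to-service calls. The default port 8080 is an assumption.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Reply to GET requests with a JSON description of the incoming request."""

    def do_GET(self):
        body = json.dumps({
            "method": self.command,
            "path": self.path,
            "headers": dict(self.headers),  # includes any x-jwt-* headers added by Envoy
        }).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):  # silence per-request logging
        pass


def make_server(port: int = 8080, host: str = "0.0.0.0") -> HTTPServer:
    return HTTPServer((host, port), EchoHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Echoing the headers back makes it easy to confirm which `x-jwt-*` values reached a backend after passing through Envoy and VPC Lattice.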

A number of other infrastructure components are deployed to support the solution:

The code for Envoy and the application components can be found in the lattice_soln/containers directory.

AWS CDK code for all other deployable infrastructure can be found in lattice_soln/lattice_soln_stack.py.

Before you begin, you must have the following prerequisites in place:

This solution has been tested using Okta; however, any OAuth-compatible provider will work if it can issue access tokens and you can retrieve them from the command line.

The following instructions describe the configuration process for Okta using the Okta web UI. This allows you to use the device code flow to retrieve access tokens, which can then be validated by the Envoy frontend deployment.
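The device code flow is the OAuth 2.0 device authorization grant (RFC 8628). It works in three steps:

1. The client requests a device code.
2. The user approves it in a browser.
3. The client polls the token endpoint until an access token is issued.

The standard-library sketch below supplies the endpoint URLs, client ID, and scope as parameters. For Okta, the endpoints are published in the authorization server's metadata document, so check the exact paths against your own tenant. The HTTP and sleep functions are injectable only to make the flow testable.

```python
import json
import time
import urllib.error
import urllib.parse
import urllib.request

DEVICE_GRANT = "urn:ietf:params:oauth:grant-type:device_code"


def post_form(url: str, fields: dict) -> tuple:
    """POST form-encoded fields and return (HTTP status, parsed JSON body)."""
    data = urllib.parse.urlencode(fields).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Accept": "application/json"})
    try:
        with urllib.request.urlopen(req) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:  # pending or slow_down arrive as HTTP 400
        return err.code, json.loads(err.read())


def device_code_flow(device_url, token_url, client_id, scope,
                     post=post_form, sleep=time.sleep):
    """Run the device authorization grant and return the access token."""
    _, auth = post(device_url, {"client_id": client_id, "scope": scope})
    print(f"Visit {auth['verification_uri']} and enter code {auth['user_code']}")
    interval = auth.get("interval", 5)  # RFC 8628 default polling interval
    while True:
        sleep(interval)
        status, body = post(token_url, {
            "grant_type": DEVICE_GRANT,
            "device_code": auth["device_code"],
            "client_id": client_id,
        })
        if status == 200:
            return body["access_token"]
        error = body.get("error")
        if error == "authorization_pending":
            continue
        if error == "slow_down":
            interval += 5  # RFC 8628: back off by 5 seconds
            continue
        raise RuntimeError(f"device flow failed: {error}")
```

The returned token is what the later examples place in the `AT` environment variable.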

During the API Integration step, you should have collected the audience, JWKS URI, and issuer. These fields are used on the command line when installing the CDK project with OAuth support.

If you only want to deploy the solution with service-to-service flows, you can deploy with a CDK command similar to the following:

To deploy the solution with OAuth functionality, you must provide the following parameters:

The solution can be deployed with a CDK command as follows:

For this solution, network access to the web application is secured through two main controls:

The Envoy configuration strips any x- headers coming from user clients and replaces them with x-jwt-subject and x-jwt-scope headers based on successful JWT validation. You are then able to match these x-jwt-* headers in VPC Lattice policy conditions.
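In the sample, Envoy performs this step itself, through access token validation and extraction of JWT contents to headers. The Python sketch below only mirrors that logic so it can be read and tested in isolation. It makes these assumptions:

- It decodes the token payload and checks issuer, audience, and expiry.
- It maps the `sub` claim and the scope claim to `x-jwt-subject` and `x-jwt-scope`.
- It drops any client-supplied `x-` headers.
- It reads Okta's `scp` array, falling back to a space-separated `scope` string; this is an assumption about token format.

It deliberately omits signature verification against the JWKS URI, which a real deployment must not skip.

```python
import base64
import json
import time


def decode_jwt_payload(token: str) -> dict:
    """Decode the JWT payload segment. WARNING: this does not verify the signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def claims_to_headers(claims: dict, issuer: str, audience: str, now=None) -> dict:
    """Check iss, aud, and exp, then map the subject and scopes to x-jwt-* headers."""
    now = time.time() if now is None else now
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if claims.get("iss") != issuer or audience not in audiences:
        raise ValueError("token issuer or audience mismatch")
    if claims.get("exp", 0) <= now:
        raise ValueError("token expired")
    scope = claims.get("scp", claims.get("scope", ""))  # Okta: "scp" is a list
    if isinstance(scope, list):
        scope = " ".join(scope)
    return {"x-jwt-subject": claims["sub"], "x-jwt-scope": scope}


def rewrite_headers(incoming: dict, jwt_headers: dict) -> dict:
    """Drop every client-supplied x- header, then add the validated x-jwt-* headers."""
    kept = {k: v for k, v in incoming.items() if not k.lower().startswith("x-")}
    kept.update(jwt_headers)
    return kept
```

Stripping all `x-` headers before adding the validated ones is what stops a client from forging `x-jwt-scope` and satisfying a VPC Lattice header condition on its own.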

This solution implements TLS endpoints on VPC Lattice and the Application Load Balancers. To reduce cost for this example, the container instances don’t implement TLS. As a result, traffic between the Application Load Balancers and the container instances is in cleartext; you can implement TLS for this hop separately if required.

In these examples, I’ve configured the domain during the CDK installation as application.internal, which will be used for communicating with the application as a client. If you change this, adjust your command lines to match.

[Optional] For examples 3 and 4, you need an access token from your OAuth provider. In each of the examples, I’ve embedded the access token in the AT environment variable for brevity.

For these first two examples, you must sign in to the container host and run a command in your container. This is because the VPC Lattice policies allow traffic from the containers. I’ve assigned IAM task roles to each container, which are used to uniquely identify them to VPC Lattice when making service-to-service calls.

To set up service-to-service calls (permitted):

Figure 5: Cluster console

Figure 6: Container instances

Figure 7: Single container instance

The policy statements permit app2 to call app1. By using the path app2/call-to-app1, you can force this call to occur.

Test this with the following commands:

You should see the following output:

The policy statements don’t permit app2 to call app3. You can simulate this in the same way and verify that the access isn’t permitted by VPC Lattice.

To set up service-to-service calls (denied):

You can change the curl command from Example 1 to test app2 calling app3.

If you’ve deployed using OAuth functionality, you can test from the shell in Example 1 that you’re unable to access the frontend Envoy server (application.internal) without a valid access token, and that you’re also unable to access the backend VPC Lattice services (app1.application.internal, app2.application.internal, app3.application.internal) directly.

You can also verify that you cannot bypass the VPC Lattice service and connect to the load balancer or web server container directly.

If you’ve deployed using OAuth functionality, you can test from the shell in Example 1 to access the application with a valid access token. A client can reach each application component by using application.internal/<componentname>. For example, application.internal/app2. If no component name is specified, it will default to app1.
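If you prefer a script to curl, the following minimal Python sketch makes the same client call. It reads the token from the `AT` environment variable and targets `application.internal/<componentname>`, as described above. The helper name `build_request` is hypothetical. Depending on how the internal domain's certificate is issued, you may need to pass `urlopen` an `ssl.SSLContext` that trusts your private CA.

```python
import os
import urllib.request

APP_DOMAIN = "application.internal"  # domain chosen during the CDK installation


def build_request(component: str = "", domain: str = APP_DOMAIN) -> urllib.request.Request:
    """Build a request to https://<domain>/<component> using the access token in $AT."""
    token = os.environ["AT"]
    url = f"https://{domain}/{component}"  # an empty component defaults to app1
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


if __name__ == "__main__":
    with urllib.request.urlopen(build_request("app2")) as resp:
        print(resp.read().decode())
```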

Connecting to app3 through Envoy will fail, because user-to-service calls are denied by the VPC Lattice service policy.

You’ve seen how you can use VPC Lattice to provide authentication and authorization to both user-to-service and service-to-service flows. I’ve shown you how to implement some novel and reusable solution components:

All of this is created almost entirely with managed AWS services, meaning you can focus more on security policy creation and validation and less on managing components such as service identities, service meshes, and other self-managed infrastructure.

Some ways you can extend upon this solution include:

I look forward to hearing about how you use this solution and VPC Lattice to secure your own applications!

Nigel is a Security, Risk, and Compliance consultant for AWS ProServe. He’s an identity nerd who enjoys solving tricky security and identity problems for some of our biggest customers in the Asia Pacific Region. He has two cavoodles, Rocky and Chai, who love to interrupt his work calls, and he also spends his free time carving cool things on his CNC machine.
