-

ElasticSearch Portal

$299.00 -

Cloud Networking Backbone

$142.00 -

Distributed Cloud Desktop

$34.99 -

Domains & Gateways

$12.99 -

RPM Cloud Server

$39.99 -

Enterprise Workmail

$24.99 -

Chat Bot Module for Customer Support

$34.99 -

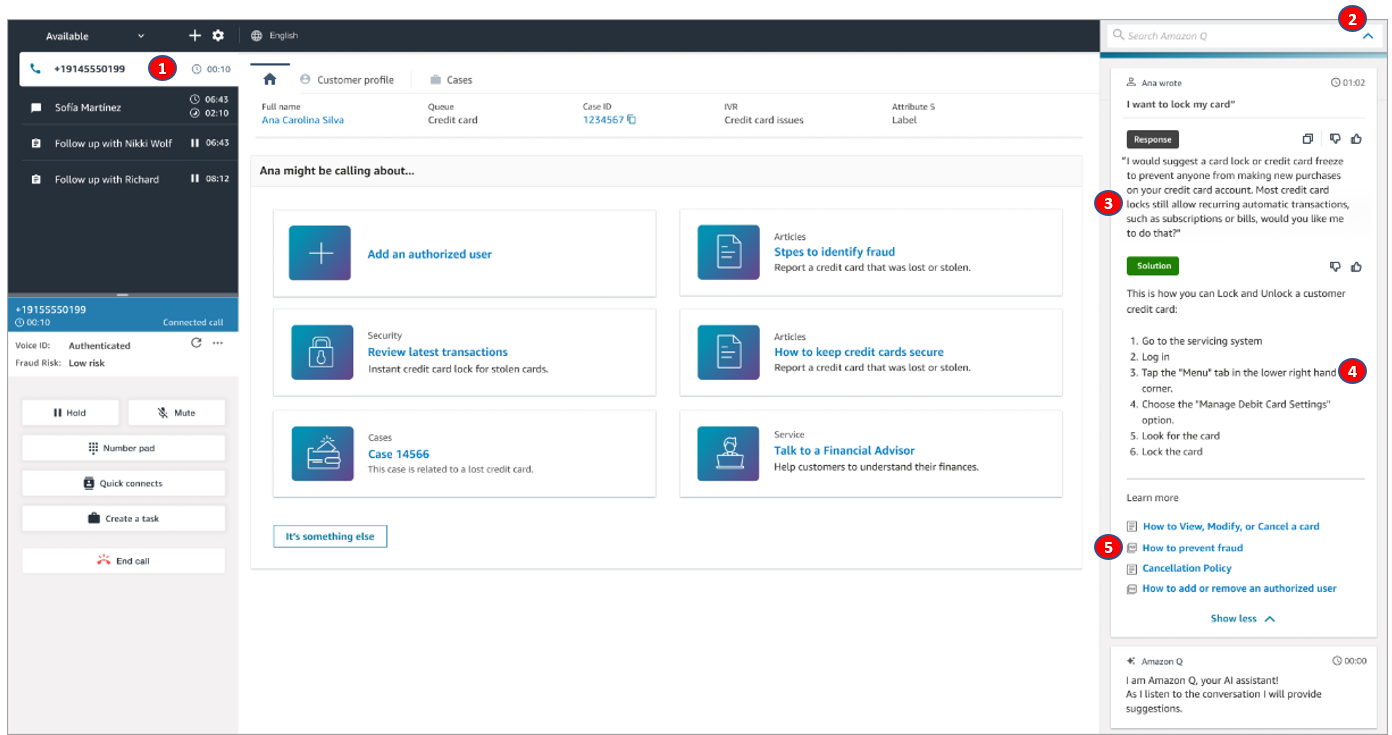

Contact Center Prime with Customer Sentiment Analysis

$79.99 -

Standard Contact Center

$39.99 -

Growth Contact Center with SMS

$61.99

Data masking and granular access control using Amazon Macie and AWS Lake Formation

Companies have been collecting user data to offer new products, recommend options more relevant to the user’s profile, or, in the case of financial institutions, to be able to facilitate access to higher credit lines or lower interest rates. However, personal data is sensitive as its use enables identification of the person using a specific system or application and in the wrong hands, this data might be used in unauthorized ways. Governments and organizations have created laws and regulations, such as General Data Protection Regulation (GDPR) in the EU, General Data Protection Law (LGPD) in Brazil, and technical guidance such as the Cloud Computing Implementation Guide published by the Association of Banks in Singapore (ABS), that specify what constitutes sensitive data and how companies should manage it. A common requirement is to ensure that consent is obtained for collection and use of personal data and that any data collected is anonymized to protect consumers from data breach risks.

In this blog post, we walk you through a proposed architecture that implements data anonymization by using granular access controls according to well-defined rules. It covers a scenario where a user might not have read access to data, but an application does. A common use case for this scenario is a data scientist working with sensitive data to train machine learning models. The training algorithm would have access to the data, but the data scientist would not. This approach helps reduce the risk of data leakage while enabling innovation using data.

We use the Faker library to create dummy data consisting of three tables: customer, bank, and card.

This architecture allows multiple data sources to send information to the data lake environment on AWS, where Amazon S3 is the central data store. After the data is stored in an S3 bucket, Macie analyzes the objects and identifies sensitive data using machine learning (ML) and pattern matching. AWS Glue then uses the information to run a workflow to anonymize the data.

Figure 1: Solution architecture for data ingestion and identification of PII

We will describe two techniques used in the process: data masking and data encryption. After the workflow runs, the data is stored in a separate S3 bucket. This hierarchy of buckets is used to segregate access to data for different user personas.

Figure 1 depicts the solution architecture:

If a user lacks permission to view sensitive data but needs to access it for machine learning model training purposes, AWS KMS can be used. The AWS KMS service manages the encryption keys that are used for data masking and to give access to the training algorithms. Users can see the masked data, but the algorithms can use the data in its original form to train the machine learning models.

This solution uses three personas:

secure-lf-admin: Data lake administrator. Responsible for configuring the data lake and assigning permissions to data administrators.

secure-lf-business-analyst: Business analyst. No access to certain confidential information.

secure-lf-data-scientist: Data scientist. No access to certain confidential information.

Choose the following Launch Stack button to deploy the CloudFormation template.

To deploy the CloudFormation template and create the resources in your AWS account, follow the steps below.

Figure 2: CloudFormation create stack screen

Figure 3: List of parameters and values in the CloudFormation stack

The deployment process should take approximately 15 minutes to finish.

To extract the data from the Amazon RDS instance, you must run an AWS DMS task. This makes the data available to Macie in an S3 bucket in Parquet format.
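If you prefer to start the task from a script rather than the console, a minimal sketch could look like the following. It assumes a boto3 DMS client that you create yourself (for example, `boto3.client("dms")`) and a task ARN taken from your own deployment; the function name `start_dms_task` is hypothetical.

```python
def start_dms_task(dms_client, task_arn: str) -> str:
    """Start an AWS DMS replication task and return the status it reports.

    dms_client is assumed to be a boto3 DMS client; task_arn is the ARN of
    the replication task created in your account.
    """
    response = dms_client.start_replication_task(
        ReplicationTaskArn=task_arn,
        # "start-replication" is used for the first run of a task.
        StartReplicationTaskType="start-replication",
    )
    return response["ReplicationTask"]["Status"]
```

The client is passed in as a parameter so that the function can be exercised with a stub and reused across Regions.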

When the status changes to Load Complete, you will be able to see the migrated data in the target bucket (dcp-macie-<AWS_REGION>-<ACCOUNT_ID>) in the dataset folder. Within each prefix there will be a parquet file that follows the naming pattern: LOAD00000001.parquet. After this step, use Macie to scan the data for sensitive information in the files.

You must create a data classification job before you can evaluate the contents of the bucket. The job you create will run and evaluate the full contents of your S3 bucket to determine whether the files stored in the bucket contain PII. This job uses the managed identifiers available in Macie and a custom identifier.
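The same job can be described as an API request. The sketch below only builds the request dictionary; it assumes you pass the result to `create_classification_job` on a boto3 `macie2` client. The job name `dcp-pii-scan` and the function name are hypothetical, and the custom identifier IDs must come from your own account.

```python
import uuid


def build_classification_job_request(account_id, bucket_name,
                                     custom_identifier_ids,
                                     job_name="dcp-pii-scan"):
    """Build keyword arguments for a one-time Macie classification job.

    The job scans one bucket with all managed data identifiers plus the
    custom identifiers supplied by the caller.
    """
    return {
        "clientToken": str(uuid.uuid4()),  # idempotency token
        "jobType": "ONE_TIME",
        "name": job_name,  # hypothetical name, choose your own
        "s3JobDefinition": {
            "bucketDefinitions": [
                {"accountId": account_id, "buckets": [bucket_name]}
            ]
        },
        "managedDataIdentifierSelector": "ALL",
        "customDataIdentifierIds": list(custom_identifier_ids),
    }
```

With a client in hand, the call would be `macie_client.create_classification_job(**request)`.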

Figure 4: List of Macie findings detected by the solution

The amount of data being scanned directly influences how long the job takes to run. You can choose the Update button at the top of the screen, as shown in Figure 4, to see the updated status of the job. This job, based on the size of the test dataset, will take about 10 minutes to complete.

After the Macie job is finished, the discovery results are ingested into the bucket dcp-glue-<AWS_REGION>-<ACCOUNT_ID>, invoking the AWS Glue step of the workflow (dcp-Workflow), which should take approximately 11 minutes to complete.

To check the workflow progress:
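One way to check progress outside the console is to ask AWS Glue for the workflow's runs and read the status of the most recent one. This is a minimal sketch, assuming a boto3 Glue client that you create yourself; the function name is hypothetical, and only the first page of runs is inspected.

```python
def latest_workflow_status(glue_client, workflow_name):
    """Return the status of the most recently started run, or None.

    glue_client is assumed to be a boto3 Glue client. Only the first page
    of results is read, which is enough for a freshly deployed workflow.
    """
    response = glue_client.get_workflow_runs(Name=workflow_name)
    runs = response.get("Runs", [])
    if not runs:
        return None
    # Pick the run with the latest start time rather than relying on order.
    newest = max(runs, key=lambda run: run["StartedOn"])
    return newest["Status"]
```

A status of `COMPLETED` indicates that the anonymization step has finished; `RUNNING` means it is still in progress.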

The AWS Glue job, which is launched as part of the workflow (dcp-workflow), reads the Macie findings to know the exact location of sensitive data. For example, the customer table contains name and birthdate; the bank table contains account_number, iban, and bban; and the card table contains card_number, card_expiration, and card_security_code. After this data is found, the job masks and encrypts the information.
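To make the masking idea concrete, here is a minimal, illustrative sketch in plain Python. It is not the Glue job's actual logic: the masking rule (replace characters with `#`, optionally keeping a short suffix) and the function names are assumptions chosen for the example.

```python
def mask_value(value: str, visible_suffix: int = 0, mask_char: str = "#") -> str:
    """Replace characters with mask_char, optionally keeping a suffix."""
    if visible_suffix <= 0 or len(value) <= visible_suffix:
        # Nothing is left visible for short values or when no suffix is kept.
        return mask_char * len(value)
    hidden = len(value) - visible_suffix
    return mask_char * hidden + value[hidden:]


def mask_record(record: dict, sensitive_columns: set) -> dict:
    """Return a copy of record with every sensitive column fully masked."""
    return {
        column: mask_value(str(value)) if column in sensitive_columns else value
        for column, value in record.items()
    }
```

For example, `mask_record({"name": "Alice", "city": "Lima"}, {"name"})` masks only the `name` field and leaves `city` untouched.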

Text encryption is done using an AWS KMS key. Here is the code snippet that provides this functionality:
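A minimal sketch of this step (not the Glue job's actual code), assuming a boto3 KMS client and a key ID or alias from your own account. Storing the ciphertext as base64 text is an assumption made so that it fits in a string column.

```python
import base64


def encrypt_text(kms_client, key_id: str, plaintext: str) -> str:
    """Encrypt a short string with AWS KMS and return base64 ciphertext.

    kms_client is assumed to be a boto3 KMS client. Direct KMS encryption
    with a symmetric key accepts at most 4,096 bytes of plaintext, which
    suits field-level values such as names or account numbers.
    """
    response = kms_client.encrypt(
        KeyId=key_id,
        Plaintext=plaintext.encode("utf-8"),
    )
    return base64.b64encode(response["CiphertextBlob"]).decode("ascii")
```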

If your application requires access to the unencrypted text and has access to the AWS KMS encryption key, you can use the following example excerpt to access the information:
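A minimal sketch of the decryption side, assuming a boto3 KMS client whose credentials are allowed to use the key and assuming the encrypted column holds base64-encoded ciphertext. The function name is hypothetical.

```python
import base64
from typing import Optional


def decrypt_text(kms_client, ciphertext_b64: str,
                 key_id: Optional[str] = None) -> str:
    """Decrypt a base64-encoded KMS ciphertext and return the original text.

    kms_client is assumed to be a boto3 KMS client. key_id is optional for
    symmetric keys because the ciphertext already identifies the key.
    """
    kwargs = {"CiphertextBlob": base64.b64decode(ciphertext_b64)}
    if key_id:
        kwargs["KeyId"] = key_id
    response = kms_client.decrypt(**kwargs)
    return response["Plaintext"].decode("utf-8")
```

A principal without permission to use the key receives an access-denied error from AWS KMS, which is what keeps the original values out of reach for the analyst personas.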

After performing all the above steps, the datasets are fully anonymized with tables created in Data Catalog and data stored in the respective S3 buckets. These are the buckets where fine-grained access controls are applied through Lake Formation:

Now that the tables are defined, you refine the permissions using Lake Formation.

After the data is processed and stored, you use Lake Formation to define and enforce fine-grained access permissions and provide secure access to data analysts and data scientists.

Figure 5: Lake Formation deployment process

Before you grant permissions to different user personas, you must register Amazon S3 locations in Lake Formation so that the personas can access the data. All buckets have been created with the pattern <prefix>-<bucket_name>-<aws_region>-<account_id>, where <prefix> matches the prefix you selected when you deployed the CloudFormation template, <aws_region> corresponds to the selected AWS Region (for example, ap-southeast-1), and <account_id> is your 12-digit AWS account ID (for example, 123456789012). For ease of reading, we left only the initial part of the bucket name in the following instructions.
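The naming pattern and the registration call can be sketched as follows. The helper names are hypothetical, and the example assumes you pass the request to `register_resource` on a boto3 `lakeformation` client; using the service-linked role is also an assumption.

```python
import re


def bucket_name(prefix: str, name: str, region: str, account_id: str) -> str:
    """Build a bucket name of the form <prefix>-<bucket_name>-<aws_region>-<account_id>."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("account_id must be exactly 12 digits")
    return f"{prefix}-{name}-{region}-{account_id}"


def register_location_request(bucket: str) -> dict:
    """Build keyword arguments for registering an S3 location in Lake Formation."""
    return {
        "ResourceArn": f"arn:aws:s3:::{bucket}",
        "UseServiceLinkedRole": True,  # assumption: no custom registration role
    }
```

With a client in hand, the call would be `lf_client.register_resource(**register_location_request(bucket))`, repeated once per bucket.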

Figure 6: Amazon S3 locations registered in the solution

You’re now ready to grant access to different users.

After successfully deploying the AWS services described in the CloudFormation template, you must configure access to resources that are part of the proposed solution.

Before proceeding you must sign in as the secure-lf-admin user. To do this, sign out from the AWS console and sign in again using the secure-lf-admin credential and password that you set in the CloudFormation template.

Now that you’re signed in as the user who administers the data lake, you can grant read-only access to all tables in the dataset database to the secure-lf-admin user.

You can confirm your user permissions on the Data Lake permissions page.

Return to the Lake Formation console to define tag-based access control for users. You can assign policy tags to Data Catalog resources (databases, tables, and columns) to control access to these types of resources. Only users who receive the corresponding Lake Formation tag (and those who receive access through the named resource method) can access the resources.

Now grant read-only access to the masked data to the secure-lf-data-scientist user.

Figure 7: Creating resource tags for Lake Formation

Figure 8: Database and table permissions granted

To complete the process and give the secure-lf-data-scientist user access to the dataset_masked database, you must assign the tag you created to the database.
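Expressed as API requests, tagging the database and granting access by tag might look like the sketch below. It only builds the request dictionaries, assuming you pass them to `add_lf_tags_to_resource` and `grant_permissions` on a boto3 `lakeformation` client. The tag key and values are placeholders for whatever tag you created, and the function names are hypothetical.

```python
def tag_database_request(database: str, tag_key: str, tag_values) -> dict:
    """Build keyword arguments that attach an LF-tag to a database."""
    return {
        "Resource": {"Database": {"Name": database}},
        "LFTags": [{"TagKey": tag_key, "TagValues": list(tag_values)}],
    }


def grant_by_tag_request(principal_arn: str, tag_key: str, tag_values,
                         resource_type: str = "TABLE",
                         permissions=("SELECT", "DESCRIBE")) -> dict:
    """Build keyword arguments that grant permissions on resources matching a tag."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "LFTagPolicy": {
                "ResourceType": resource_type,  # "DATABASE" or "TABLE"
                "Expression": [
                    {"TagKey": tag_key, "TagValues": list(tag_values)}
                ],
            }
        },
        "Permissions": list(permissions),
    }
```

Because the grant refers to the tag rather than to a specific table, tables tagged later with the same key and value are covered without a new grant.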

Now grant the secure-lf-business-analyst user read-only access to certain encrypted columns using column-based permissions.

Now give the secure-lf-business-analyst user access to the Customer table, except for the username column.
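A column exclusion like this one maps to a `TableWithColumns` resource with a column wildcard. The sketch below builds such a request, assuming you pass it to `grant_permissions` on a boto3 `lakeformation` client; the function name is hypothetical, and the database and table names are supplied by the caller.

```python
def grant_table_except_columns_request(principal_arn: str, database: str,
                                       table: str, excluded_columns) -> dict:
    """Build keyword arguments granting SELECT on all columns except some."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                # Grant every column except the ones listed here.
                "ColumnWildcard": {
                    "ExcludedColumnNames": list(excluded_columns)
                },
            }
        },
        "Permissions": ["SELECT"],
    }
```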

Figure 9: Granting access to secure-lf-business-analyst user in the Customer table

Now give the secure-lf-business-analyst user access to the Card table, only for columns that do not contain PII.

Figure 10: Editing tags in Lake Formation tables

In this step, you add the segment tag to the cred_card_provider column of the card table. For the secure-lf-business-analyst user to have access, you need to configure this tag for the user.

Figure 11: Configure tag-based access for user secure-lf-business-analyst

The next step is to revoke Super access to the IAMAllowedPrincipals group.

The IAMAllowedPrincipals group includes all IAM users and roles that are allowed access to Data Catalog resources using IAM policies. The Super permission allows a principal to perform all operations supported by Lake Formation on the database or table on which it is granted. While this grant is in place, access to Data Catalog resources and Amazon S3 locations is controlled exclusively by IAM policies, and the individual permissions configured in Lake Formation are not considered. For this reason, you remove the grants already configured for the IAMAllowedPrincipals group, leaving only the Lake Formation settings.

Repeat the same steps for the dataset_encrypted and dataset_masked databases.
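The revocation can also be scripted. The sketch below builds one request per database and one for all tables in each database, assuming you pass each request to `revoke_permissions` on a boto3 `lakeformation` client. In the API, the console's Super permission is named `ALL` and the group is identified as `IAM_ALLOWED_PRINCIPALS`; the function name is hypothetical.

```python
def revoke_super_requests(databases) -> list:
    """Build revoke requests for IAMAllowedPrincipals on databases and their tables."""
    requests = []
    for database in databases:
        resources = (
            {"Database": {"Name": database}},
            # An empty TableWildcard targets every table in the database.
            {"Table": {"DatabaseName": database, "TableWildcard": {}}},
        )
        for resource in resources:
            requests.append({
                "Principal": {
                    "DataLakePrincipalIdentifier": "IAM_ALLOWED_PRINCIPALS"
                },
                "Resource": resource,
                "Permissions": ["ALL"],  # shown as "Super" in the console
            })
    return requests
```

A revoke call is expected to fail for a resource where the grant does not exist, so you may want to catch that error and continue with the next request.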

Figure 12: Revoke SUPER access to the IAMAllowedPrincipals group

You can confirm all user permissions on the Data Permissions page.

To validate the permissions of different personas, you use Athena to query the Amazon S3 data lake.

Ensure the query result location has been created as part of the CloudFormation stack (secure-athena-query-<ACCOUNT_ID>-<AWS_REGION>).
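If you want to run the validation queries from a script, a minimal sketch of the request is shown below. It assumes you pass the result to `start_query_execution` on a boto3 Athena client created with the credentials of the persona being tested; the function name is hypothetical.

```python
def athena_query_request(sql: str, database: str,
                         account_id: str, region: str) -> dict:
    """Build keyword arguments for an Athena query against the data lake.

    The output location follows the bucket name created by the stack:
    secure-athena-query-<ACCOUNT_ID>-<AWS_REGION>.
    """
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {
            "OutputLocation": f"s3://secure-athena-query-{account_id}-{region}/"
        },
    }
```

Running the same query under each persona's credentials shows the effect of the Lake Formation grants: the result contains only the tables and columns that the persona is allowed to read.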

The secure-lf-admin user should see all tables in the dataset database and dcp. As for the dataset_encrypted and dataset_masked databases, the user should not have access to the tables.

Figure 13: Athena console with query results in clear text

Finally, validate the secure-lf-data-scientist permissions.

The secure-lf-data-scientist user will be able to view all the columns, but only in the dataset_masked database.

Figure 14: Athena query results with masked data

Now, validate the secure-lf-business-analyst user permissions.

Figure 15: Validating secure-lf-business-analyst user permissions to query data

The secure-lf-business-analyst user should only be able to view the card and customer tables of the dataset_encrypted database. In the card table, the user has access only to the cred_card_provider column, and in the Customer table, only to the username, mail, and sex columns, as previously configured in Lake Formation.

After testing the solution, remove the resources you created to avoid unnecessary expenses.

Now that the solution is implemented, you have an automated anonymization dataflow. It demonstrates how you can build on AWS serverless services, where you pay only for what you use and don't have to worry about infrastructure provisioning. In addition, this solution is customizable to meet other data protection requirements such as General Data Protection Law (LGPD) in Brazil, General Data Protection Regulation in Europe (GDPR), and the Association of Banks in Singapore (ABS) Cloud Computing Implementation Guide.

We used Macie to identify the sensitive data stored in Amazon S3 and AWS Glue to read the Macie findings and anonymize the sensitive data found. Finally, we used Lake Formation to implement fine-grained data access control to specific information and demonstrated how you can programmatically grant access to applications that need to work with unmasked data.


Iris is a solutions architect at AWS, supporting clients in their innovation and digital transformation journeys in the cloud. In her free time, she enjoys going to the beach, traveling, hiking and always being in contact with nature.

Paulo is a Principal Solutions Architect and supports clients in the financial sector to tread the new world of DeFi, web3.0, Blockchain, dApps, and Smart Contracts. In addition, he has extensive experience in high performance computing (HPC) and machine learning. Passionate about music and diving, he devours books, plays World of Warcraft and New World, and cooks for friends.

Leo is a Principal Security Solutions Architect at AWS and uses his knowledge to help customers better use cloud services and technologies securely. Over the years, he had the opportunity to work in large, complex environments, designing, architecting, and implementing highly scalable and secure solutions to global companies. He is passionate about football, BBQ, and Jiu Jitsu — the Brazilian version of them all.
